Machine World Foundation Model (WFM)

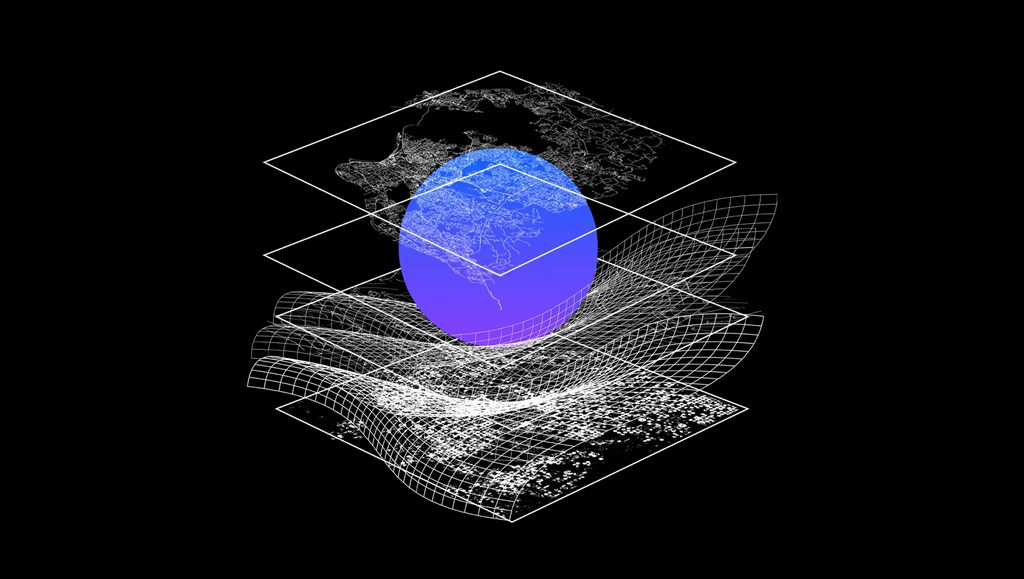

Imagine a single, massive 'brain' that has been trained by reading almost every book, viewing every public photo and video, and analysing a significant portion of the scientific data and code on the internet. It has not been trained to do one specific job. Instead, it has developed a deep, interconnected understanding of how our world works, from language and culture to physics and biology. This general-purpose, knowledge-rich brain is the essence of a WFM. It is a large-scale machine learning model, trained on a vast and diverse dataset encompassing multiple modalities of data (text, images, audio, video, sensor data, etc.).

Structure

Massive Scale: Trained on petabytes of data using immense computational power (thousands of high-end GPUs, TPUs, and other accelerators).

Multimodality: It understands and connects different types of information (modalities). It does not just process text or images; it understands the relationship between text and images, and potentially sound, video, and more.

General Purpose: Its knowledge is broad, not narrow. It can be adapted (fine-tuned) for a wide range of downstream tasks without needing to be trained from scratch.

Emergent Abilities: These models exhibit capabilities that were not explicitly programmed, such as complex reasoning, chain-of-thought or theory-of-mind logic, and creative generation.

The ultimate goal of a World Foundation Model is to create an AI that has a holistic understanding of our reality, enabling it to reason, plan, and solve complex, cross-disciplinary problems in a way that resembles human understanding.

Challenges

Bias and Safety: The model will learn all the biases present in its training data from the internet. Mitigating this is a huge challenge. Input from human workers ('Mechanical Turks') also helps supply context that is still too nuanced for AI at present.

Hallucinations: These models can generate plausible but incorrect or fabricated information.

Immense Cost: The compute and energy requirements for training are enormous, limiting development to a few large organisations. More are appearing, but whether they will last is an open question.

Explainability: It is often a ‘black box’: we do not know exactly how it arrives at its answers. Pressure from governments and other concerned agencies may force change.

Control & Alignment: Ensuring that the goals of such powerful models are aligned with human values is one of the most critical research areas in AI. This is a can of worms, as we are not sure what universal ethics are.

A WFM is not just a bigger AI model; it is a paradigm shift. It moves us from building thousands of specialised, single-use tools to creating a single, versatile, and incredibly knowledgeable base intelligence that can be applied to almost any problem, ultimately aiming to capture the complex tapestry of our world in a digital form.

While both humans and LLMs can produce similar outputs, the processes driving those outputs are fundamentally different. Human cognition is rooted in comprehension, context, and sensory experiences, while LLMs excel in speed and pattern recognition across vast datasets. By understanding these differences, you can better use the strengths of both systems. Combining human insight with AI efficiency offers opportunities to achieve outcomes that neither could accomplish alone. This synergy has the potential to transform fields such as education, healthcare, and scientific research, where the unique capabilities of humans and AI can complement one another to solve complex challenges.

How the Brain Builds Its World Model

Predictive Processing (or Predictive Coding). This is a leading modern theory. The brain is not a blank slate that just reacts to stimuli. Instead, it is a prediction engine.

It generates predictions: Your brain constantly uses its pre-existing world model (based on past experiences) to predict the sensory input it should be receiving.

It calculates prediction errors: It then compares these predictions to the actual sensory signals coming from your eyes, ears, etc. The difference is the ‘prediction error.’

It minimises the error: The brain's primary job is to minimise prediction error. It can do this in two ways:

Update the model: "I was wrong. Let me change my model to better fit the data." (This is learning).

Act on the world: "I predicted that turning my head would make the bird move to the left of my visual field. I'll turn my head to make the prediction come true." (This is action).
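The two routes to a smaller prediction error can be sketched as a toy loop. This is only an illustration under strong simplifying assumptions: the belief and the world are each reduced to a single number, and the names `update_model`, `act_on_world`, `learning_rate` and `action_gain` are hypothetical, chosen for this example rather than taken from any particular theory or library.

```python
def update_model(belief, observation, learning_rate=0.3):
    """Perception/learning: move the belief a step toward what was sensed."""
    prediction_error = observation - belief
    return belief + learning_rate * prediction_error


def act_on_world(world_state, belief, action_gain=0.3):
    """Action: move the world a step toward what was predicted."""
    prediction_error = world_state - belief
    return world_state - action_gain * prediction_error


def run_demo(steps=20):
    # Route 1: the world stays fixed and the belief is revised (learning).
    belief, world_state = 0.0, 10.0
    for _ in range(steps):
        belief = update_model(belief, world_state)
    error_after_learning = abs(world_state - belief)

    # Route 2: the belief stays fixed and the world is changed (action).
    belief, world_state = 0.0, 10.0
    for _ in range(steps):
        world_state = act_on_world(world_state, belief)
    error_after_acting = abs(world_state - belief)

    return error_after_learning, error_after_acting


if __name__ == "__main__":
    print(run_demo())
```

In both runs the gap between prediction and sensation shrinks toward zero; what differs is which side moves. A real brain presumably mixes both routes continuously, which this sketch does not attempt to model.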

Hierarchical Processing. The brain processes information in a hierarchy, from simple to complex.

Low levels: Process basic features (edges, lines, sound frequencies).

Higher levels: Integrate these features into objects (a face, a voice).

Even higher levels: Create narratives, contexts, and meanings ("That is my friend Sarah, and she looks happy").

This hierarchy allows the model to be both detailed and rich with meaning.

Multisensory Integration

The brain combines sight, sound, touch, smell, and proprioception (your sense of body position) into a single, unified model. The sound of a clap and the sight of hands coming together are fused into one event. This is why ventriloquists can ‘throw’ their voice to a dummy: the brain prioritises the visual model when there is a conflict.
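One common way to formalise this kind of fusion is reliability-weighted averaging, where each sense contributes in proportion to how precise it is. The sketch below assumes that model (it is a swapped-in simplification, not something the text above specifies), and the angles and variances are made-up numbers for illustration. The function name `fuse_cues` is hypothetical.

```python
def fuse_cues(estimates, variances):
    """Combine several noisy estimates, weighting each by 1 / variance.

    Returns the fused estimate and its variance.
    """
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    fused = sum(p * e for p, e in zip(precisions, estimates)) / total_precision
    return fused, 1.0 / total_precision


# Hypothetical ventriloquist scene, in degrees from straight ahead:
# vision places the voice at the dummy's mouth (0) with low uncertainty,
# hearing places it at the performer (20) with high uncertainty.
visual_location, visual_variance = 0.0, 1.0
auditory_location, auditory_variance = 20.0, 25.0

perceived_location, perceived_variance = fuse_cues(
    [visual_location, auditory_location],
    [visual_variance, auditory_variance],
)

if __name__ == "__main__":
    print(perceived_location, perceived_variance)
```

With these assumed numbers the fused location lands well under one degree from the dummy, because the sharper visual estimate dominates the blurrier auditory one.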

The world model isn't just in your head; it's grounded in your body. Your model of a ‘chair’ is not just a visual image; it includes the motor programs for sitting, the sensation of hardness, and the feeling of rest. Your body is a fundamental part of the model.

How Do We Become Aware of the Model?

Unconscious Modelling: Your visual system processes edges and colours. Your brain's predictive model quickly suggests "cup." Your olfactory system picks up the scent, and the model is updated to "cup of coffee." This is all unconscious.

Error Minimisation: If the handle is on the wrong side, a small prediction error is generated, and you might look closer to resolve it.

Global Broadcast: This stable, multisensory model, "cup of coffee", wins the competition against rival interpretations and is broadcast globally in your brain. It's now available to your language system (so you can say "coffee"), your memory system (reminding you it's yours), and your motor system (to plan reaching for it).

Conscious Awareness: This global availability is your conscious experience of the coffee. You are now aware of it. According to some theories, a higher-order thought ("I am seeing coffee") may also be present, solidifying the conscious feeling.

Embodied Interaction: Your awareness is not just visual. It includes the anticipation of the warmth, the weight, and the taste, all part of the brain's predictive model, which includes your body.
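The competition and broadcast steps above can be caricatured in a few lines. This is a deliberately crude sketch: it assumes each candidate interpretation is scored by a single prediction-error number and that the lowest error wins, and the candidate labels, scores, and subsystem handlers are all hypothetical. It says nothing about how awareness itself arises.

```python
def broadcast_winner(candidates, subsystems):
    """Pick the interpretation with the lowest prediction error and
    make it available to every subsystem.

    candidates: dict mapping an interpretation to its prediction error.
    subsystems: dict mapping a subsystem name to a function of the winner.
    """
    winner = min(candidates, key=candidates.get)
    responses = {name: handler(winner) for name, handler in subsystems.items()}
    return winner, responses


# Hypothetical interpretations of the scene and their prediction errors.
candidates = {"cup of coffee": 0.1, "cup of tea": 0.6, "vase": 0.9}

# Hypothetical subsystems that receive the broadcast content.
subsystems = {
    "language": lambda content: f'say "{content}"',
    "memory": lambda content: f"recall whose {content} this is",
    "motor": lambda content: f"plan a reach for the {content}",
}

winner, responses = broadcast_winner(candidates, subsystems)

if __name__ == "__main__":
    print(winner)
    for name, response in responses.items():
        print(name, "->", response)
```

The point of the design is that the subsystems never see the losing interpretations: only the single winning model is shared, which mirrors the idea of one stable content becoming globally available.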

In conclusion, the human brain is aware of its world model because a select part of that constantly evolving, predictive model achieves a special state within the brain, whether we describe that as global broadcasting, becoming the object of a higher-order thought, or being a highly integrated set of information. This process transforms mere data processing into a subjective, lived experience.